Week Four for the Housing Team

AI-Driven Housing Evaluation for Rural Community Development

This blog post outlines where the Housing Team stands after week four of DSPG. We have made a lot of progress over the past four weeks, and we are excited to share it with you!

Project Overview

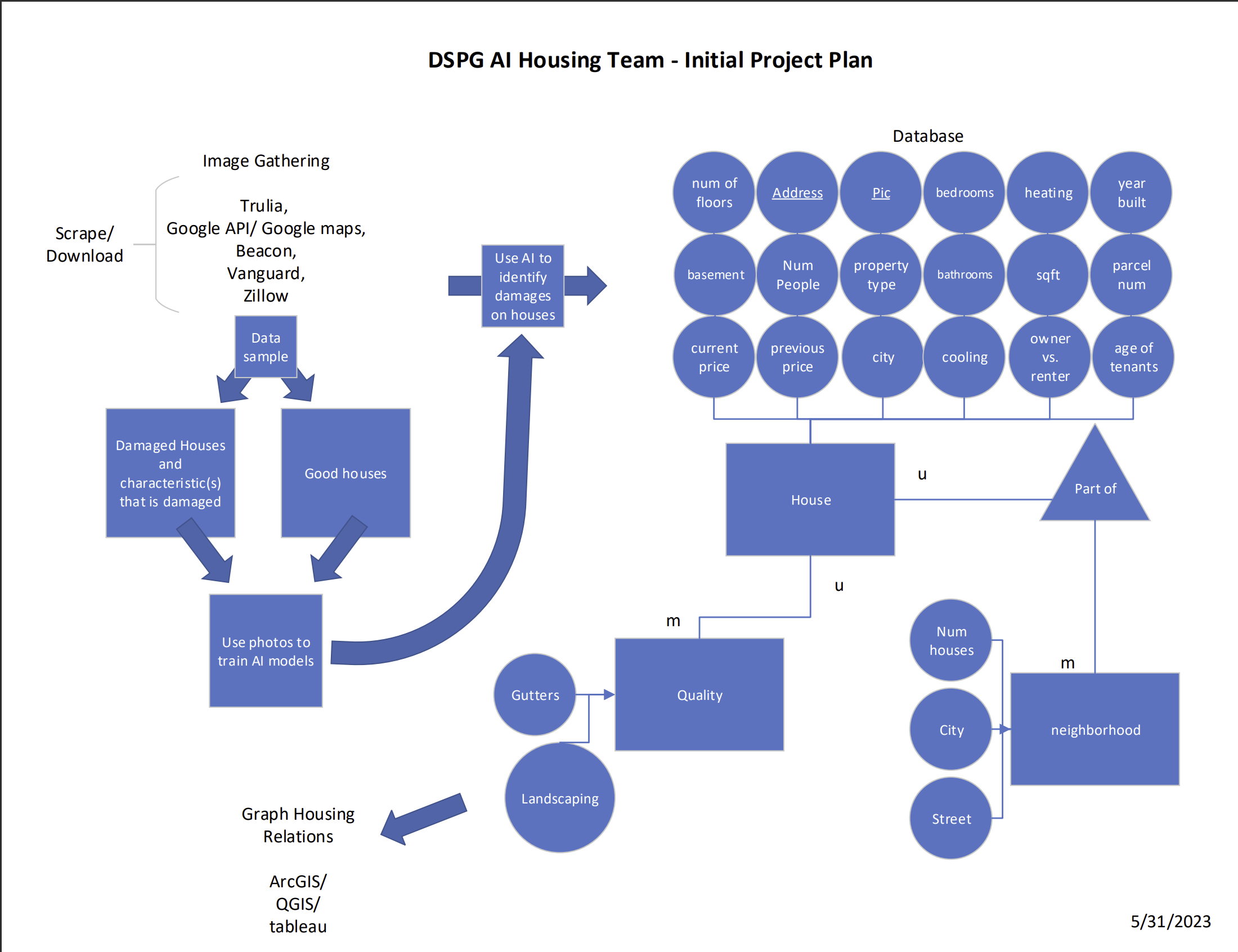

This is the project plan we came up with during the first week of DSPG. The project is intended to span three years with DSPG, and different interns will work on it in the coming years. As a result, the plan is ambitious for a single summer.

Problem Statement

The absence of a comprehensive and unbiased assessment of housing quality in rural communities poses challenges in identifying financing gaps and effectively allocating resources for housing improvement. Consequently, this hinders the overall well-being and health of residents, impacts workforce stability, diminishes rural vitality, and undermines the economic growth of Iowa. Moreover, the subjective nature of evaluating existing housing conditions and the limited availability of resources for thorough investigations further compound the problem. To address these challenges, there is a pressing need for an AI-driven approach that can provide a more accurate and objective evaluation of housing quality, identify financing gaps, and optimize the allocation of local, state, and federal funds to maximize community benefits.

By using web scraping to collect images of houses from various assessor websites, we can develop an AI model that analyzes housing features and categorizes them as good or poor quality. This enables targeted investment strategies: it identifies houses in need of improvement and the areas where financial resources should be directed. By leveraging AI technology in this manner, the project seeks to streamline the housing evaluation process, eliminate subjective biases, and facilitate informed decision-making for housing investment and development initiatives in rural communities.

Goals and Objectives

Generate Google Street View URLs for Slater, Independence, Grundy Center, and New Hampton

Scrape available housing data for Slater, Independence, Grundy Center, and New Hampton

Zillow

Realtor.com

Beacon

Vanguard

Combine data frames

Create AI models

Our Progress

We have been making good progress toward the goals and objectives outlined above. Since the beginning of the Data Science for the Public Good program, we have been expanding our knowledge of data science, particularly in areas that relate to this housing project. We have been learning new concepts through DataCamp. We have also watched two webinars on tidycensus and started building AI models to practice with.

DataCamp Training:

GitHub Concepts

AI Fundamentals

Introduction to R

Intermediate R

Introduction to the Tidyverse

Web Scraping in R

Introduction to Deep Learning with Keras

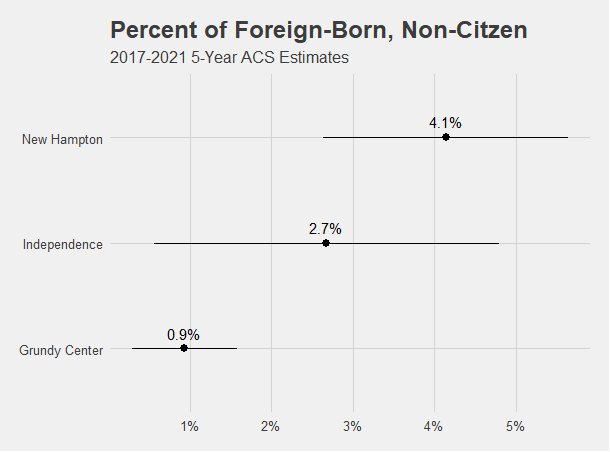

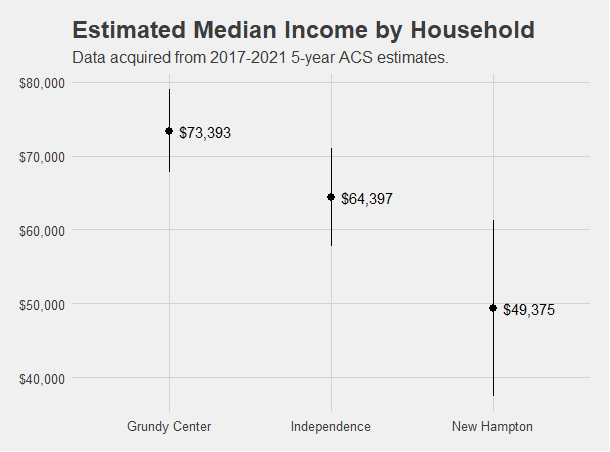

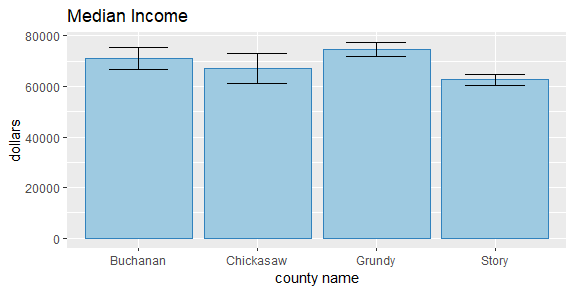

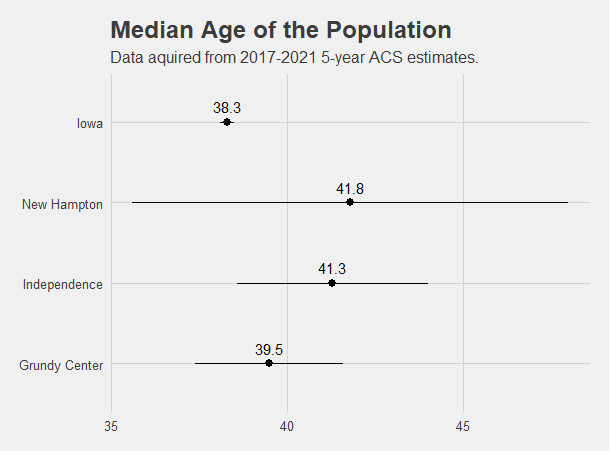

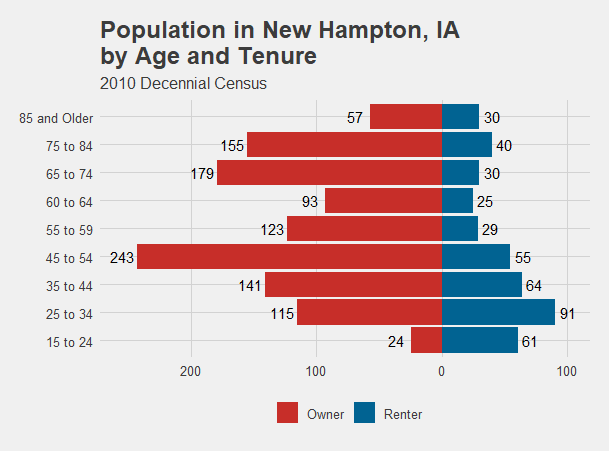

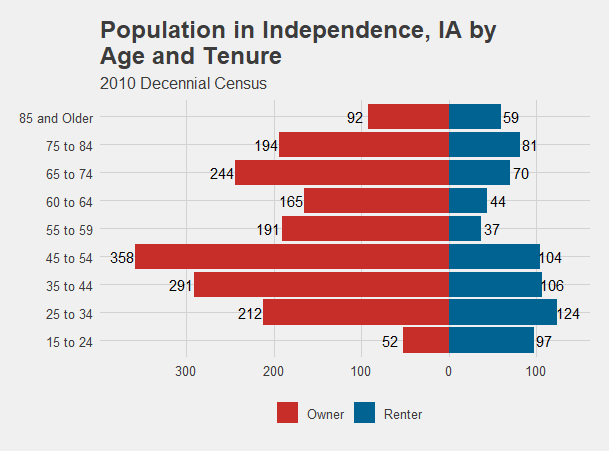

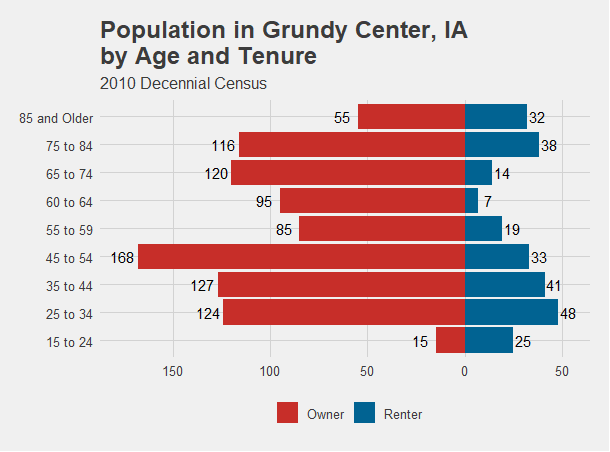

TidyCensus Demographic Data Collection:

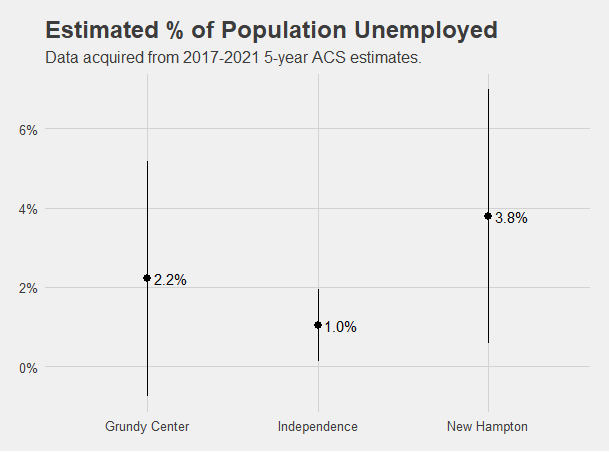

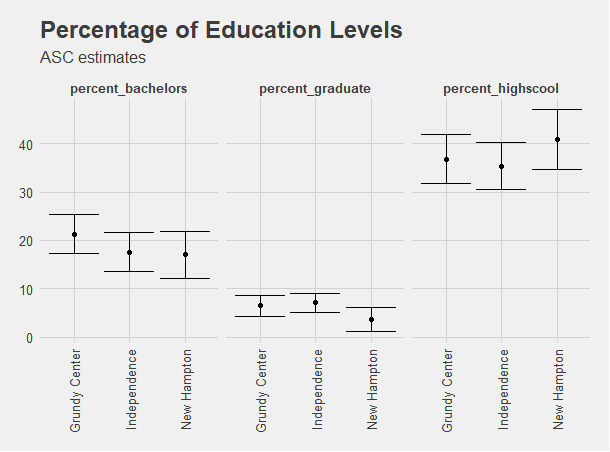

One of the first steps in our project was to explore the available demographic data in our selected cities and counties. We thought it valuable to understand the demographic data, and we have represented in the plots below:

Creating Test AI Models:

The next step was creating an AI model. We decided to build one early in the project, before finishing the housing data collection, so that we would better understand how the pieces fit together later. The AI model below tests for vegetation in front of houses.


This Week:

In-Person Data Collection

On Tuesday this week, the entire DSPG program went to Slater to practice data collection in person. The housing group took this as an opportunity to collect some housing photos on the ground to use in our AI Model later on.

Google Street View and URLs

We are getting the majority of the photos for our AI model from Google Street View. Google provides an API, accessed with an API key, that returns an image for a specific address. We spent the first half of this week pulling addresses from each of our cities and creating URLs to pull the images from Google Street View.
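As a rough illustration of the URL generation step, here is a minimal Python sketch that builds one image URL per address for the Street View Static API. It is not the code we ran; the addresses, the `YOUR_API_KEY` placeholder, the image size, and the function name `street_view_url` are all assumptions made for the example.

```python
from urllib.parse import urlencode

# Base endpoint of the Google Street View Static API.
STREET_VIEW_ENDPOINT = "https://maps.googleapis.com/maps/api/streetview"


def street_view_url(address, api_key, size="640x640"):
    """Build a Street View Static API image URL for one street address."""
    params = {
        "size": size,         # requested image size in pixels, WIDTHxHEIGHT
        "location": address,  # free-text address, geocoded by the API
        "key": api_key,       # placeholder; use your own key
    }
    # urlencode escapes spaces and commas so the address is URL-safe.
    return f"{STREET_VIEW_ENDPOINT}?{urlencode(params)}"


# Hypothetical addresses, for illustration only.
addresses = [
    "101 Main St, Slater, IA",
    "202 1st Ave, Grundy Center, IA",
]
urls = [street_view_url(a, api_key="YOUR_API_KEY") for a in addresses]
for url in urls:
    print(url)
```

Keeping the URL builder as a small function makes it easy to apply to a whole table of addresses and store the result as a new column.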

We ran into a couple of problems when doing this. The biggest is coverage: because we are working with cities in rural areas, Google Street View images are not available for every street in our cities.
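One possible way to detect missing coverage before requesting an image is the Street View metadata endpoint, which reports a `status` field such as `OK` or `ZERO_RESULTS` for a location. The sketch below is an assumption about how this could be done, not a description of our workflow; the sample addresses and responses are hand-written, and `fetch_metadata` is defined but not called because it needs network access and a valid key.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Metadata endpoint of the Street View Static API.
METADATA_ENDPOINT = "https://maps.googleapis.com/maps/api/streetview/metadata"


def metadata_url(address, api_key):
    """Build the metadata request URL for one address."""
    query = urlencode({"location": address, "key": api_key})
    return f"{METADATA_ENDPOINT}?{query}"


def has_street_view(metadata):
    """Return True when the metadata response reports available imagery."""
    return metadata.get("status") == "OK"


def fetch_metadata(address, api_key):
    """Call the metadata endpoint (requires network access and a valid key)."""
    with urlopen(metadata_url(address, api_key), timeout=10) as response:
        return json.load(response)


# Offline illustration with hand-written responses (hypothetical addresses).
sample_responses = {
    "101 Main St, Slater, IA": {"status": "OK"},
    "500 Gravel Rd, Slater, IA": {"status": "ZERO_RESULTS"},
}
missing = [
    address
    for address, metadata in sample_responses.items()
    if not has_street_view(metadata)
]
print(missing)
```

Addresses that end up in `missing` could then be flagged for another image source, such as in-person photos or listing sites.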

Below is a sample from the tables we created containing the URLs to grab the images from Google Street View.


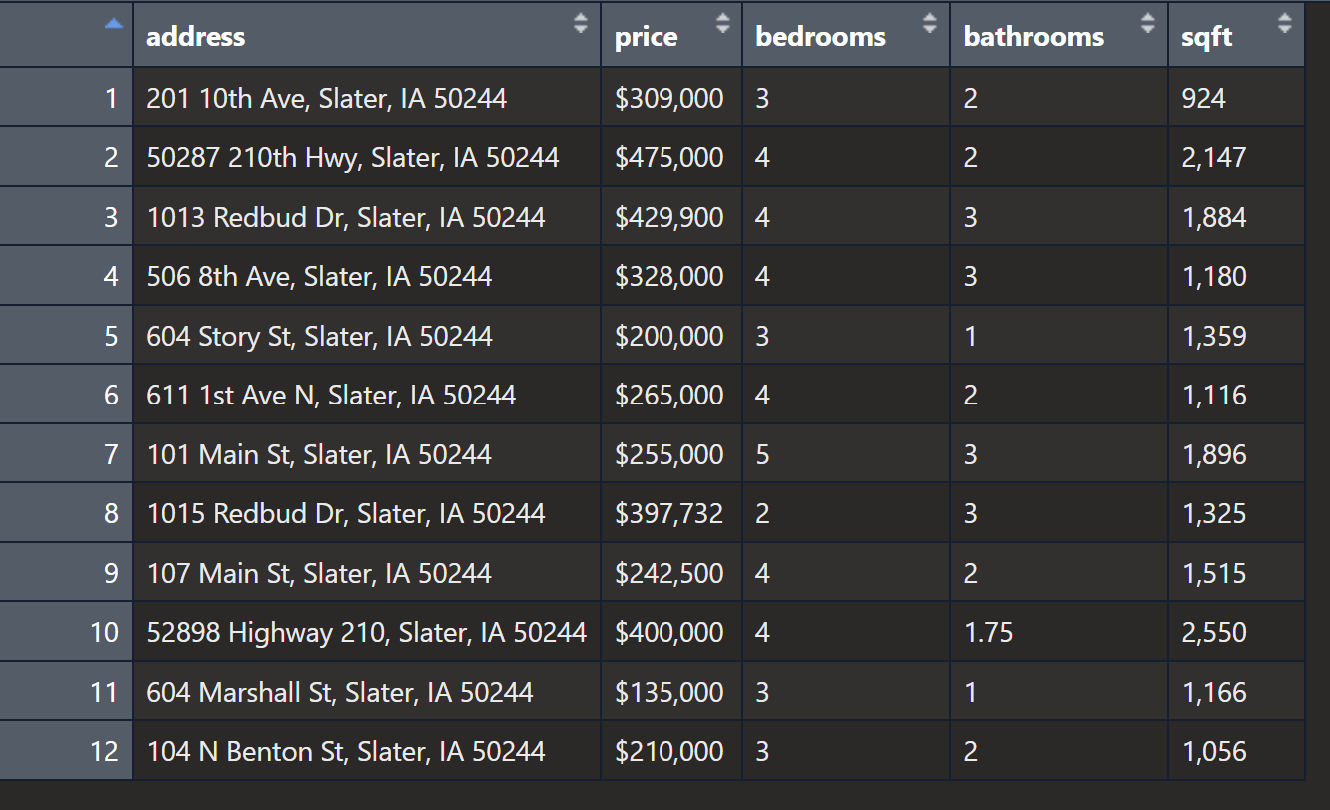

Web Scraping

Once we finished collecting addresses and generating URLs, we moved on to scraping the web for more images. We decided to grab images from Zillow, Realtor.com, and the county assessor pages for our cities. This week we successfully scraped images from Zillow.
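To give a sense of what collecting image links from a listing page involves, here is a small, generic Python sketch using only the standard library. The HTML snippet, the `example.com` URLs, and the `ImageCollector` class are made up for illustration; they do not reflect the actual markup of Zillow or any other site, and this is not the scraper we wrote.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageCollector(HTMLParser):
    """Collect the src attribute of every <img> tag in a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            # Resolve relative paths against the page URL.
            self.image_urls.append(urljoin(self.base_url, src))


# Made-up page content standing in for a downloaded listing page.
sample_html = """
<div class="listing">
  <img src="/photos/house-1.jpg" alt="Front of house">
  <img src="https://example.com/photos/house-2.jpg" alt="Back yard">
  <img alt="No source attribute">
</div>
"""
collector = ImageCollector(base_url="https://example.com/listing/123")
collector.feed(sample_html)
print(collector.image_urls)
```

In practice, the page would first have to be downloaded, and sites with anti-scraping protections may not return usable HTML at all.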


Happies

Completed a successful meeting with Erin Olson-Douglas

Finished collecting and creating URL addresses for Google Street View images

Zillow owns Trulia, so we don’t have to web scrape both sites :)

Successfully scraped portions of data from Zillow!

Crappies

Web Scraping

Beacon and Vanguard have anti-scraping protections

Angelina’s Excel doesn’t operate as expected

Future Plans and Next Steps

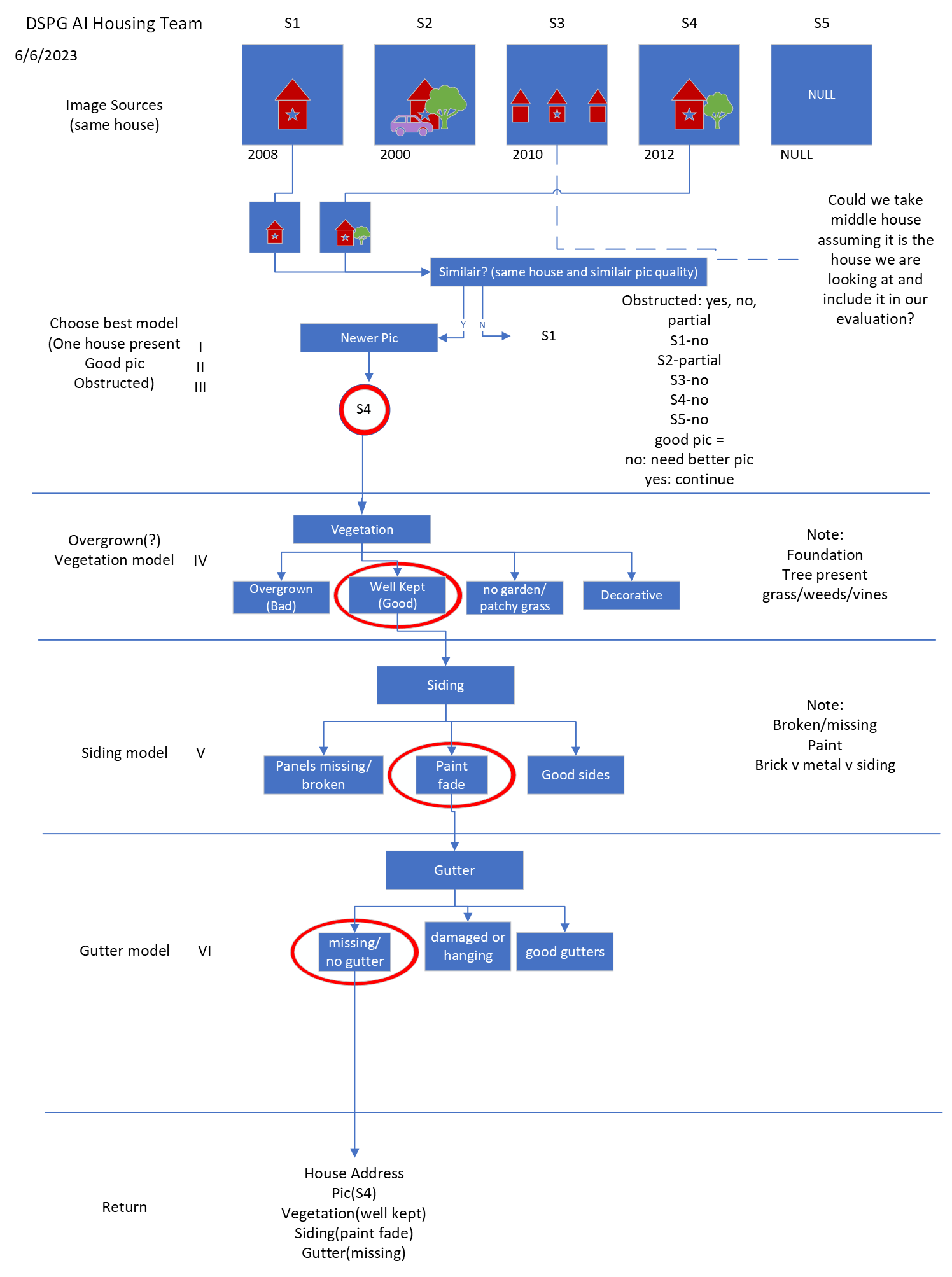

Once we are able to scrape enough images off of Zillow, Realtors.com, and the assessor pages, we will be able to move on with creating AI Models. The diagram below outlines how the AI Models will work in the next steps of out project.